In my last post I described how to detect speech commands in continuous audio signals. One important ingredient was a good amount of labeled data. Quite early on, it became clear to me that an efficient labeling interface would be extremely helpful. The first iteration of that interface was a simple command-line app, quickly to be replaced by an ipywidgets-based interface.

Now, it offers a graphical user interface directly inside Jupyter notebooks and is easily adaptable to various types of data, such as text, images, or audio. The code is designed to be flexible and simple to use. For example, annotating a set of images is as easy as

    >>> from chmp.label import annotate
    >>> annotations = annotate(
    ...     ['./data/img_circle.png', './data/img_square.png'],
    ...     classes=['square', 'circle'],
    ...     cls='image',
    ... )

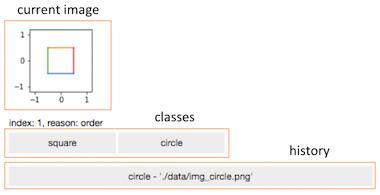

This call will immediately display a widget showing the current image with a list of buttons to select one of the passed classes, similar to

Clicking on a class button annotates the current image and adds it to the history. The next image is then displayed right away. As the user interacts with the widget, their selections are appended to the annotations object. After three clicks, it could look like

    >>> annotations
    [{'index': 0, 'item': '0.png', 'label': 'circle', 'reason': 'order'},
     {'index': 1, 'item': '1.png', 'label': 'circle', 'reason': 'order'},
     {'index': 2, 'item': '2.png', 'label': 'circle', 'reason': 'order'}]
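
Since each entry is a small dictionary, a quick sanity check of the label distribution needs nothing beyond the standard library. The following is only a sketch: it uses a hand-copied plain list with the structure shown above, and it assumes the real annotations object can be iterated in the same way.

```python
from collections import Counter

# Plain list of dicts mirroring the structure shown above. It is copied by
# hand for illustration and not produced by the widget.
annotations = [
    {'index': 0, 'item': '0.png', 'label': 'circle', 'reason': 'order'},
    {'index': 1, 'item': '1.png', 'label': 'circle', 'reason': 'order'},
    {'index': 2, 'item': '2.png', 'label': 'circle', 'reason': 'order'},
]

# Tally how often each label was assigned.
label_counts = Counter(entry['label'] for entry in annotations)
print(label_counts)
```

Note that such a tally counts every entry, so an item that has been labeled more than once would contribute more than once.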

In case of an error, already labeled images can be revisited by clicking on the corresponding item in the history. Once a new label has been selected, the annotations object contains both the original label and the re-assigned label. Suppose 0.png is relabeled. In that case, the annotations object will contain two entries for it, similar to

    >>> annotations
    [{'index': 0, 'item': '0.png', 'label': 'circle', 'reason': 'order'},
     ...,
     {'index': 3, 'item': '0.png', 'label': 'square', 'reason': 'repeat'}]

To get only the latest annotation for each item, use the .get_latest() method of the annotations object, as in

    >>> annotations.get_latest()
    [{'index': 3, 'item': '0.png', 'label': 'square', 'reason': 'repeat'},
     {'index': 2, 'item': '2.png', 'label': 'circle', 'reason': 'order'},
     {'index': 1, 'item': '1.png', 'label': 'circle', 'reason': 'order'}]
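
To make the idea of "latest annotation per item" concrete, here is a minimal sketch in plain Python. The helper `latest_per_item` is hypothetical and is not taken from the library. It works on a hand-written list of dictionaries with the fields shown above, and the choice to sort by descending index is mine.

```python
def latest_per_item(entries):
    """Keep, for every item, only the entry with the highest index.

    Hypothetical helper for illustration, not the library's implementation.
    """
    latest = {}
    for entry in entries:
        current = latest.get(entry['item'])
        if current is None or entry['index'] > current['index']:
            latest[entry['item']] = entry
    # Newest annotations first.
    return sorted(latest.values(), key=lambda entry: entry['index'], reverse=True)


# Hand-written history in which 0.png was relabeled at the end.
history = [
    {'index': 0, 'item': '0.png', 'label': 'circle', 'reason': 'order'},
    {'index': 1, 'item': '1.png', 'label': 'circle', 'reason': 'order'},
    {'index': 2, 'item': '2.png', 'label': 'circle', 'reason': 'order'},
    {'index': 3, 'item': '0.png', 'label': 'square', 'reason': 'repeat'},
]

result = latest_per_item(history)
for entry in result:
    print(entry['index'], entry['item'], entry['label'])
```

The original entry for 0.png stays in the history, so nothing is lost by relabeling. Only the reduced view drops it.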

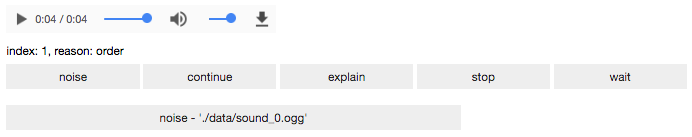

annotate can not only handle images, but also audio files. Simply change the

cls parameter in the annotate call to cls='audio'. Now, the widget will

show audio controls instead of images. For my

experiment with speech commands, it

looks similar to

For more advanced needs, the display mechanism can easily be adapted by passing a function to convert an item to its HTML representation. For example, to show the image file name in red, use

    >>> annotations = annotate(
    ...     ['./data/img_circle.png', './data/img_square.png'],
    ...     display_value=lambda item: (
    ...         f'<span style="color: #f00;">image: {item}</span>'
    ...     ),
    ...     classes=['square', 'circle'],
    ... )
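
One thing to keep in mind when building HTML from item values: characters such as `<` or `&` in a file name would be interpreted as markup. A minimal sketch of a more defensive display function follows. The name `red_label` is hypothetical, and the sketch assumes only what is described above, namely that the display function receives an item and returns an HTML string.

```python
import html


def red_label(item):
    """Return an HTML snippet showing the item in red (hypothetical helper)."""
    # Escape the item so that special characters are shown literally
    # instead of being parsed as HTML.
    safe = html.escape(str(item))
    return f'<span style="color: #f00;">image: {safe}</span>'


snippet = red_label('./data/img_<circle>&co.png')
print(snippet)
```

Such a function could be passed as display_value in place of the lambda shown above.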

If this short description got you interested, please have a look at the code. If you have any questions or comments, I would love to hear from you on Twitter @c_prohm.